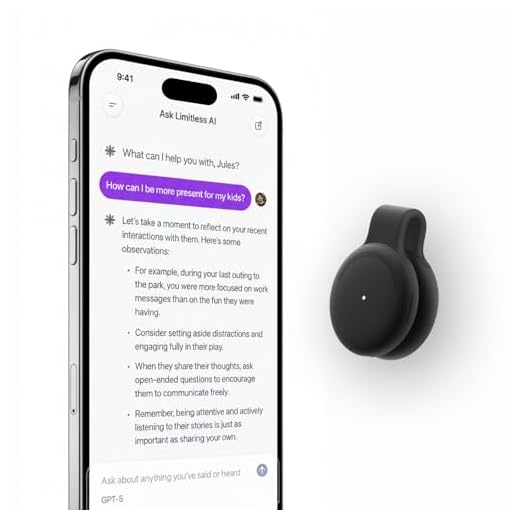

Your smart speaker buffers 3-5 seconds of continuous audio before wake word detection, creating exposure windows you can’t fully eliminate. Start by opting out of human review of your recordings in your device’s privacy dashboard, configure automated deletion policies with 3-month rolling windows, and physically mute microphones during sensitive conversations. Position devices three meters from private discussion areas and segment them onto isolated network VLANs. Audit third-party voice skills monthly, removing any with disproportionate permissions. The sections below cover additional measures that reduce your household’s audio surveillance footprint.

Key Takeaways

- Use physical mute buttons to hardware-disconnect microphones during sensitive conversations, with LED indicators confirming when devices are silenced.

- Configure automated deletion policies for voice recordings, setting rolling windows of 3 to 36 months or immediate purging after command completion.

- Position smart speakers at least three meters from sensitive discussion areas and away from windows to prevent eavesdropping attacks.

- Isolate smart speakers on separate network segments with WPA3 encryption and firewall rules blocking unsolicited inbound connections.

- Conduct monthly audits of third-party voice apps, removing unused skills and verifying developer credentials to minimize data exposure.

Understanding What Your Smart Speaker Actually Hears and Records

How much of your conversation actually reaches the cloud servers of Amazon, Google, or Apple? Your smart speaker’s microphone sensitivity operates continuously, scanning ambient audio for wake words. This local processing doesn’t transmit data—yet.

Once voice detection triggers, recording begins immediately, capturing everything until you stop speaking. That audio streams directly to corporate servers for analysis.

You’re not controlling what qualifies as a command versus casual conversation. The system decides. False activations occur when similar phonemes match wake word patterns, transmitting unintended dialogue. These recordings persist in company databases unless you manually delete them.

Most devices buffer 3-5 seconds of pre-wake audio to guarantee complete command capture. You’ve already been recorded before you realize the device activated.
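
To make the pre-wake buffer concrete, here is a minimal Python sketch of a fixed-size ring buffer. It is not any vendor’s implementation: the 20 ms frame length, the `PreWakeBuffer` class, and the `simulate` helper are assumptions made for illustration. It shows why speech from just before the wake word ends up in what gets uploaded.

```python
from collections import deque

FRAME_MS = 20            # assumed frame length, for illustration only
PRE_WAKE_SECONDS = 3     # lower end of the 3-5 second range described above

FRAMES_PER_SECOND = 1000 // FRAME_MS
BUFFER_FRAMES = PRE_WAKE_SECONDS * FRAMES_PER_SECOND


class PreWakeBuffer:
    """Keeps only the most recent few seconds of audio frames in memory."""

    def __init__(self, max_frames: int = BUFFER_FRAMES) -> None:
        # A deque with maxlen silently drops the oldest frame when full.
        self._frames = deque(maxlen=max_frames)

    def push(self, frame: bytes) -> None:
        self._frames.append(frame)

    def flush(self) -> list[bytes]:
        """Return the buffered frames (oldest first) and empty the buffer."""
        frames = list(self._frames)
        self._frames.clear()
        return frames


def simulate(frames, wake_index):
    """Feed frames in order; when the wake word fires at wake_index,
    return the frames that would accompany the command upload."""
    buf = PreWakeBuffer()
    for i, frame in enumerate(frames):
        buf.push(frame)
        if i == wake_index:
            return buf.flush()
    return []  # the wake word never fired, so nothing leaves the buffer
```

With a 3-second buffer and 20 ms frames the deque holds 150 frames, so a wake event carries up to the last 3 seconds of audio and nothing older.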

Popular smart speakers such as Google Home, Amazon Echo, and Apple HomePod all follow this same listen-locally, then-stream pattern when handling voice-controlled routines and connected devices.

Review your voice history regularly through manufacturer apps. Disable continuous listening modes if available. Configure stricter voice detection thresholds to reduce false triggers. Your threat surface expands with every connected microphone—audit accordingly.

Adjusting Privacy Settings Across Major Voice Assistant Platforms

Where do you begin hardening voice assistant configurations when each platform hides critical controls behind multiple menu layers? Work through each manufacturer’s settings path systematically.

Amazon Alexa: Navigate Settings > Alexa Privacy > Review Voice History. Disable “Help Improve Amazon Services” (the exact label varies by app version) to opt out of human review. Enable automatic deletion and choose a three-month maximum retention.

Google Assistant: Access Google Account > Data & Privacy > Web & App Activity. Turn off audio storage entirely. Where your device lets you control updates, review each one before accepting it so that a new release can’t silently revert your restrictions.

Apple Siri: Enter Settings > Siri & Search > Siri & Dictation History. Delete historical data. Disable “Improve Siri & Dictation” to stop sharing audio samples with Apple’s human reviewers.

Samsung Bixby: Access Settings > Privacy > Customization Service. Revoke voice data collection permissions.

For comprehensive smart home security, consider cameras with smart privacy features like the Aqara Camera E1 that complement your voice assistant privacy measures.

Execute quarterly audits. Updates can reset protections to default configurations or introduce new data-sharing options that are enabled by default, so treat every update as a reason to recheck your settings.

Document your baseline settings. Verify configurations post-update. Maintain operational control through persistent monitoring protocols.
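
One lightweight way to document a baseline and check it after an update is to record the settings as key/value pairs and diff them. The sketch below is illustrative only: the setting names are hypothetical labels you would choose yourself, and the values are entered by hand from each app, since none of these platforms is assumed to expose an export API.

```python
def diff_settings(baseline: dict, current: dict) -> dict:
    """Return {setting: (expected, actual)} for every setting that drifted."""
    drift = {}
    for key, expected in baseline.items():
        actual = current.get(key, "<missing>")
        if actual != expected:
            drift[key] = (expected, actual)
    return drift


# Hypothetical setting names; record whatever your own apps actually show.
baseline = {
    "alexa.auto_delete_months": 3,
    "alexa.human_review": False,
    "siri.improve_siri_and_dictation": False,
}

# Values re-read from the apps after an update (example data).
current = {
    "alexa.auto_delete_months": 3,
    "alexa.human_review": True,  # reverted by the update in this example
}

for name, (expected, actual) in diff_settings(baseline, current).items():
    print(f"{name}: expected {expected!r}, found {actual!r}")
```

A setting that is missing from the post-update record is reported too, which is a prompt to go and look for where the control moved.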

Managing and Deleting Your Voice Recording History

Your voice recordings create a persistent attack surface that requires active management through each platform’s data access controls.

Major voice assistants store your audio history by default, but you can access, review, and delete these recordings through dedicated privacy dashboards in your account settings.

Implementing automated deletion policies, with retention windows of 3 to 36 months depending on the platform, reduces your exposure window while maintaining some command history for accuracy improvements.

Accessing Your Voice Data

Most smart home platforms retain extensive voice recordings by default, storing not only your commands but also ambient audio snippets captured during wake-word false positives.

You’ll find this voice data storage across Amazon Alexa, Google Assistant, and Apple Siri accounts, accessible through their respective privacy dashboards. Navigate to your account settings to audit what’s been recorded—you’ll likely discover months or years of accumulated interactions.

These repositories create significant privacy concerns, as they contain identifiable voice patterns, conversation fragments, and household activity timelines.

Access protocols vary by manufacturer: Amazon uses alexa.amazon.com/spa/index.html#settings/dialogs, Google requires myactivity.google.com/myactivity, and Apple employs Settings > Siri & Search > Siri & Dictation History.

Review these archives regularly to understand your actual exposure footprint and establish deletion schedules that minimize data retention windows.

Automated Deletion Settings Options

After identifying your exposure footprint, configure automated deletion policies to systematically purge accumulated voice data without manual intervention. These protocols execute based on retention thresholds you define, eliminating vulnerable historical recordings.

Implement deletion schedules aligned with user preferences through these strategic controls:

- Rolling deletion windows: Set 3-month, 18-month, or 36-month automatic purge cycles that continuously remove aged recordings.

- Activity-based triggers: Configure deletion upon completion of command execution, maintaining zero persistent storage.

- Granular retention policies: Establish device-specific rules, preserving critical automation logs while eliminating conversational data.

Access manufacturer-specific deletion frameworks through account security settings. Amazon’s auto-delete function, Google’s activity controls, and Apple’s Siri data management each offer distinct implementation architectures.

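Verify that deletion actually ran by reopening the voice history dashboard after the retention window passes and confirming that older entries are gone. You can’t inspect the provider’s backup systems yourself, so treat the dashboard as the best evidence available. Automated policies remove the risk of forgetting a manual purge while keeping you in control of the retention window.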
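
The logic of a rolling deletion window is simple enough to sketch. The Python below is not how any provider implements auto-delete; `split_by_retention` and the sample recordings are hypothetical, and a 3-month window is approximated as 90 days. It only illustrates which entries a given window would leave in your history.

```python
from datetime import datetime, timedelta, timezone


def split_by_retention(recordings, retention_days, now=None):
    """Split recordings into (keep, purge) lists of ids by age.

    recordings: iterable of (recording_id, recorded_at) pairs, where
    recorded_at is a timezone-aware datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    keep, purge = [], []
    for rec_id, recorded_at in recordings:
        (purge if recorded_at < cutoff else keep).append(rec_id)
    return keep, purge


# Example data (made up for illustration).
now = datetime(2024, 6, 30, tzinfo=timezone.utc)
recordings = [
    ("a", datetime(2024, 6, 1, tzinfo=timezone.utc)),   # 29 days old
    ("b", datetime(2024, 3, 1, tzinfo=timezone.utc)),   # 121 days old
    ("c", datetime(2023, 1, 15, tzinfo=timezone.utc)),  # well over a year old
]

keep, purge = split_by_retention(recordings, retention_days=90, now=now)
print("keep:", keep)
print("purge:", purge)
```

Running the same function with 540 or 1080 days shows how much more history the 18-month and 36-month options leave in place.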

Disabling Always-On Listening When You Don’t Need It

Always-on listening creates a persistent attack surface that you can minimize through deliberate configuration changes.

You’ll need to understand three core mitigation protocols: configuring manual activation settings to require explicit wake commands, establishing scheduled microphone mute intervals during predetermined low-use periods, and implementing physical mute buttons as hardware-enforced silencing mechanisms.

Each method addresses different threat scenarios and operational requirements for reducing unauthorized audio collection.

Manual Activation Settings Explained

While wake-word detection operates continuously by default, you can configure most smart home devices to require physical button activation before they process audio. This manual activation benefits your operational security by eliminating passive surveillance vectors entirely. You maintain absolute user control over recording initiation.

Implementation protocols vary by manufacturer:

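- Amazon Echo devices: Enable “Tap to Alexa” mode through Settings > Device Options where your model offers it (it is mainly available on Echo Show displays; the menu location varies by model), and keep the microphone muted until you need it.

- Google Home products: Activate the hardware mute switch, which requires a physical toggle before the device accepts commands.

- Apple HomePod: Turn off wake-phrase listening in the Home app and rely on touch-and-hold on the top of the speaker to invoke Siri.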

This approach converts your devices into push-to-talk systems, preventing unauthorized audio capture during sensitive discussions.

You’ll sacrifice convenience for guaranteed microphone dormancy, establishing deterministic control over your acoustic environment.

Scheduled Microphone Mute Times

Most smart home deployments require voice assistance only during specific daily windows—mornings before work, evenings after dinner, weekends during household projects.

You’ll enhance privacy by implementing scheduled silence intervals that disable microphones during predictable non-use periods. Configure your automation platform to enforce routine voice pauses when you’re usually absent or asleep, for example 9 AM to 5 PM on weekdays and midnight to 6 AM daily.

This protocol eliminates unnecessary attack surface during your vulnerability windows. With that example schedule the microphones are off for 82 of the 168 hours in a week, which removes roughly half of the device’s listening time while maintaining full functionality when needed.

Set calendar-based exceptions for holidays and vacation periods. Deploy network-level blocking rules as backup enforcement: they can’t switch the microphone off, but they stop captured audio from leaving your network even if device firmware ignores the schedule.

If you run network monitoring (a SIEM dashboard or your router’s traffic logs), flag any speaker traffic outside permitted timeframes.
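
For the example schedule above (9 AM to 5 PM on weekdays and midnight to 6 AM daily), the share of the week it covers can be checked directly. This is a small sketch with hypothetical function names; holiday exceptions are left out.

```python
from datetime import datetime, timedelta


def is_muted(moment: datetime) -> bool:
    """True if `moment` falls inside the example mute schedule."""
    hour = moment.hour
    overnight = hour < 6                                # midnight to 6 AM, every day
    workday = moment.weekday() < 5 and 9 <= hour < 17   # 9 AM to 5 PM, Monday to Friday
    return overnight or workday


def muted_hours_per_week() -> int:
    """Count muted hours by walking one full week hour by hour."""
    start = datetime(2024, 1, 1)  # a Monday; any full week gives the same total
    return sum(is_muted(start + timedelta(hours=h)) for h in range(7 * 24))


hours = muted_hours_per_week()
print(f"{hours} of 168 hours muted ({hours / 168:.0%})")
# prints: 82 of 168 hours muted (49%)
```

The same `is_muted` check could drive a reminder or a home automation rule, depending on what your platform exposes.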

Physical Mute Button Usage

Most smart speakers and displays include a hardware mute switch or button; you’ll recognize it by the sliding toggle or push button adjacent to volume controls. On many models it physically disconnects microphone power, but confirm this in the manufacturer’s documentation for your device.

Physical button placement varies by manufacturer, but these switches interrupt circuit-level power delivery, preventing any audio capture regardless of software states or network compromises.

Mute button benefits include:

- Circuit-level protection – Hardware disconnection defeats firmware vulnerabilities and remote activation exploits

- Visual confirmation – An indicator light (red on Amazon Echo, orange on Google Nest devices) shows when the microphone is muted, providing constant surveillance status awareness

- Zero-trust enforcement – Physical control overrides all software permissions and cloud-based listening protocols

Deploy muting during sensitive conversations, confidential phone calls, and overnight periods.

This hardware-based approach establishes definitive control boundaries that software privacy settings can’t guarantee, eliminating always-on surveillance vectors when voice assistance isn’t required.

Physical Security Measures: Mute Buttons and Strategic Placement

Hardware-based privacy controls provide your first line of defense against unauthorized voice capture.

You’ll want devices with physical mute switches that electrically disconnect microphones—not software toggles that can be bypassed through firmware exploits. Verify the mute button functionality cuts power at the circuit level by testing with audio monitoring tools during activation.

Strategic device placement demands threat modeling.

Position smart speakers away from windows where laser microphone attacks could capture membrane vibrations. Maintain minimum three-meter distances from areas where you discuss sensitive information—financial data, passwords, proprietary business matters.

Never place devices in bedrooms, home offices, or conference spaces where private conversations occur.

Create acoustic dead zones by positioning devices in hallways or common areas with high ambient noise.

This limits their effective range while maintaining utility. You’re establishing concentric security perimeters: hardware controls as your primary barrier, physical positioning as your secondary defense layer.

Both must function simultaneously for strong protection.

Reviewing Third-Party App Permissions and Voice Skills

Why do third-party voice applications represent your largest attack surface in smart home ecosystems? Each skill or action you enable grants developers access to your voice data, creating third party risks that extend beyond your device manufacturer’s security protocols.

You must audit app permissions systematically. Third-party integrations often request excessive access to microphone streams, conversation histories, and linked account credentials. These permissions persist until you explicitly revoke them.

Implement this protocol immediately:

- Conduct monthly permission audits through your voice assistant’s companion app, disabling unused skills that maintain active data connections

- Verify developer credentials before installation—prioritize applications from established vendors with published security policies

- Monitor skill behavior patterns for unexpected activation triggers or data requests that exceed stated functionality

Default to zero-trust architecture. If a skill’s capabilities seem disproportionate to its stated purpose, it’s harvesting intelligence. Remove it. Your attack surface contracts with each permission you deny.
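
The “disproportionate permissions” test can be written down as a set difference. The sketch below assumes you have listed each skill’s requested permissions by hand from the companion app; the category names, permission labels, and the `EXPECTED` mapping are all hypothetical and should be adapted to your own judgment of what each kind of skill needs.

```python
# Hypothetical mapping: permissions a skill category plausibly needs.
EXPECTED = {
    "timer": set(),
    "weather": {"location"},
    "shopping": {"account_link", "payment"},
}


def excess_permissions(category, requested):
    """Permissions requested beyond what the category is expected to need."""
    return set(requested) - EXPECTED.get(category, set())


def audit(skills):
    """Return {skill_name: sorted excess permissions} for skills to review."""
    flagged = {}
    for name, category, requested in skills:
        extra = excess_permissions(category, requested)
        if extra:
            flagged[name] = sorted(extra)
    return flagged


# Example inventory (made up for illustration).
skills = [
    ("Egg Timer", "timer", []),
    ("Local Forecast", "weather", ["location"]),
    ("Trivia Night", "game", ["contacts", "location"]),
    ("Quick Weather", "weather", ["location", "voice_history"]),
]

for name, extra in audit(skills).items():
    print(f"{name}: review or remove (extra: {', '.join(extra)})")
```

Unknown categories default to an empty expected set, so any permission they request is flagged. That matches the zero-trust default described above.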

Network Security: Protecting Your Smart Devices From External Access

While your voice assistant’s permissions create internal vulnerabilities, your network infrastructure determines whether attackers can reach those devices at all.

Implement network segmentation immediately—isolate your smart home devices on a separate VLAN from computers containing sensitive data. This containment strategy prevents lateral movement if attackers compromise a single device.
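
After setting up the VLAN, it is worth confirming that every speaker actually landed on it. A minimal check with Python’s standard `ipaddress` module follows; the `192.168.20.0/24` subnet and the device names and addresses are assumptions for illustration, and you would substitute the values from your router’s client list.

```python
import ipaddress

# Assumed address range of the isolated IoT VLAN.
IOT_SUBNET = ipaddress.ip_network("192.168.20.0/24")


def speakers_outside_iot_vlan(speakers, iot_subnet=IOT_SUBNET):
    """Return names of speakers whose address is not in the IoT subnet."""
    return [
        name
        for name, addr in speakers.items()
        if ipaddress.ip_address(addr) not in iot_subnet
    ]


# Example data copied by hand from a router's client list (made up).
speakers = {
    "kitchen-speaker": "192.168.20.14",
    "bedroom-speaker": "192.168.10.77",  # still on the main LAN
}

print(speakers_outside_iot_vlan(speakers))
```

Any name this returns is a device that can still reach your computers directly and should be moved.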

Configure your router’s firewall to block all unsolicited inbound connections. Disable UPnP, which automatically opens ports without authorization.

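Enable WPA3 encryption where your router and devices support it; unpatched WPA2 clients remain vulnerable to KRACK attacks, and WPA3 also resists offline password guessing.

Where your devices and router support it, use certificate-based authentication rather than relying on default passwords; at a minimum, change every default password. MAC address filtering provides an additional barrier, though it’s bypassable, so consider it a supplementary defense.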

Monitor your network traffic for anomalous patterns. Smart speakers shouldn’t communicate with unknown external servers.
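
One way to spot “unknown external servers” is to compare the hostnames a speaker looks up (for example, from your router’s DNS log) against an allowlist of vendor domains. The sketch below is illustrative: the suffix list is an assumption, not a complete or authoritative list of vendor endpoints, and the hostnames are made up.

```python
# Illustrative allowlist; build your own from what your devices actually use.
ALLOWED_SUFFIXES = ("amazon.com", "amazonaws.com", "google.com", "apple.com")


def is_expected(hostname, allowed=ALLOWED_SUFFIXES):
    """True if hostname equals an allowed domain or is a subdomain of one."""
    host = hostname.lower().rstrip(".")
    return any(host == s or host.endswith("." + s) for s in allowed)


def unexpected_destinations(hostnames):
    """Return the sorted, de-duplicated hostnames not covered by the allowlist."""
    return sorted({h for h in hostnames if not is_expected(h)})


# Example DNS log entries (made up for illustration).
observed = [
    "api.amazon.com",
    "telemetry.example.net",
    "evilamazon.com",        # not a subdomain of amazon.com
    "telemetry.example.net",
]

print(unexpected_destinations(observed))
```

Matching on a leading dot matters: a plain `endswith("amazon.com")` would wrongly accept look-alike domains such as `evilamazon.com`.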

Install firmware updates within 48 hours of release; these patches often address vulnerabilities that are already being exploited.

Your network perimeter decides who can reach your devices at all. Harden it accordingly.

Creating a Voice Privacy Routine for Your Household

Because voice assistants operate continuously in shared spaces, your household needs coordinated privacy protocols that everyone follows. Establishing voice command etiquette prevents inadvertent data exposure and maintains operational security across all family members.

Implement these family communication strategies to fortify your voice privacy posture:

- Establish verbal cue systems: Designate specific phrases that signal when to mute devices before sensitive conversations occur.

- Create privacy zones: Define physical areas where voice commands are prohibited, particularly home offices and bedrooms where confidential discussions happen.

- Schedule regular privacy audits: Review voice history logs weekly with household members to identify security gaps and refine protocols.

Document these protocols in a household security charter that specifies consequences for violations. Train all family members on threat vectors, emphasizing how casual voice interactions can compromise sensitive information.

Your privacy framework must evolve as new devices integrate into your ecosystem, requiring quarterly protocol reviews and updates to maintain defensive integrity.

Frequently Asked Questions

Can Hackers Activate My Smart Speaker’s Microphone Without Triggering the Indicator Light?

Yes, sophisticated hackers can exploit firmware vulnerabilities to bypass your smart speaker’s hardware indicator light.

Advanced hacker techniques target the device’s operating system, decoupling microphone activation from LED notification protocols.

You’ll need strong microphone security measures: implement network segmentation, enable automatic firmware updates, and monitor unusual traffic patterns.

Consider hardware switches that physically disconnect power to the microphone—they’re immune to software exploits.

You must assume that indicator lights aren’t foolproof security mechanisms.

Do Voice Assistants Listen Differently When Using Battery Power Versus Plugged In?

Voice assistants don’t fundamentally alter their listening behavior between battery power and plugged-in states—voice detection protocols remain identical.

However, some manufacturers apply aggressive power management on battery, reducing microphone sampling rates or wake-word sensitivity to extend runtime. The practical effect is less reliable wake-word detection rather than a different privacy policy: once the device activates, it handles audio the same way.

You must verify your assistant maintains consistent security policies regardless of power source to maintain operational control.

Can My Voice Recordings Be Subpoenaed in Legal Cases or Court Proceedings?

Like Pandora’s box once opened, your voice recordings can’t be sealed from legal reach.

Yes, they can be subpoenaed in court proceedings. Law enforcement can obtain them with a warrant, and privacy law offers only limited protection against a valid subpoena.

You’ll face exposure during criminal investigations, civil litigation, and divorce cases. Major providers have already surrendered recordings.

Turn off recording storage where the platform allows it, shorten retention windows, and delete your history regularly, because data that no longer exists can’t be produced. Your vocal data’s a liability, so treat it accordingly.

Do Smart Speakers Still Collect Data When Connected to Guest Wifi Networks?

Yes, your smart speakers collect identical data regardless of guest network security configurations.

Guest WiFi isolation doesn’t prevent device-to-cloud transmission—it only restricts local network access. Your speaker maintains full cloud connectivity, uploading voice queries, usage patterns, and command histories to manufacturer servers.

To assert control over smart speaker privacy, you must disable cloud features entirely or block the device’s upstream traffic at the network level, which also disables most of its functionality.

Guest networks provide zero mitigation against upstream data harvesting—they’re architecturally irrelevant to manufacturer surveillance protocols.

Can Multiple Voice Assistants From Different Brands Share Data With Each Other?

Voice assistants don’t natively share data across brands. There’s no cross-brand compatibility protocol enabling direct data exchange between Alexa, Google Assistant, and Siri.

However, you’re exposed through third-party integrations and linked accounts. When you connect multiple assistants to the same services (IFTTT, smart home hubs), you’re creating data sharing pathways.

Each manufacturer maintains separate data silos, but your consolidated usage patterns become vulnerable through these intermediary platforms you’ve authorized.

Conclusion

You’ve implemented the protocols—now maintain them. Research shows 43% of smart speaker owners never review their privacy settings post-setup, leaving default configurations that optimize data collection. Don’t become that statistic. Schedule quarterly audits of your voice assistant permissions, rotation of network credentials, and purges of stored recordings. Your threat surface expands with each connected microphone. Treat voice privacy as an ongoing security operation, not a one-time configuration. Execute these protocols consistently, or you’re exposed.